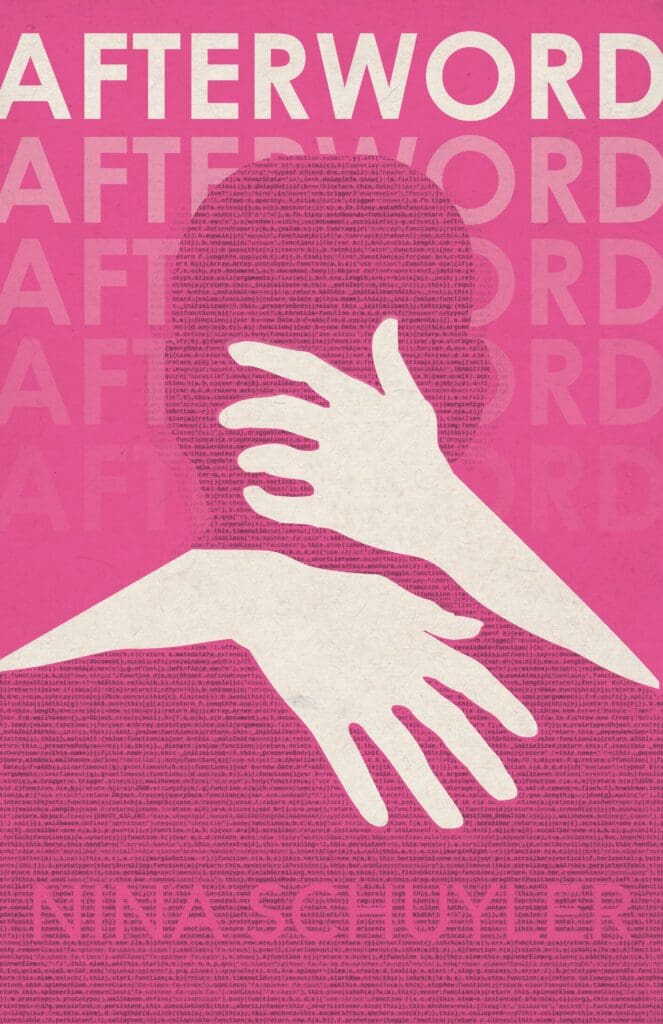

In her suspenseful and thought-provoking new novel, Afterword, Nina Schuyler’s characters struggle to know themselves even as they push technology to the edge of human understanding.

A brilliant mathematician, Virginia Samson has spent her life painstakingly re-creating her great love, Haru, in the form of an AI. Into this advanced technology she’s built Haru’s voice, memories, and intellectual curiosity. But while she had hoped to spend the rest of her days companionably discussing math with Haru, their interactions soon force Virginia to confront how much she has never understood about Haru, and about herself.

Schuyler, who lives in the Bay Area, is the author of the novels The Translator and The Painting. Her forthcoming short story collection, In This Ravishing World (Regal House Publishing, 2024), won the W.S. Porter Prize for Short Story Collections and the Prism Prize for Climate Literature. She teaches at Stanford Continuing Studies, the University of San Francisco, and the Writing Salon.

On November 4, she will teach a ZYZZYVA class on time in fiction. You can learn more and register here.

Over email, Schuyler and I discussed craft, the limits of artificial and human intelligence, and the questions of hubris that haunt modern science.

LAURA COGAN: The technology has been developing for some time, but terms like “natural language processing” (NLP) and “large language models” hit the mainstream this year. So the timing of Afterword’s publication is impeccable. You must have started your research and writing some time ago. Why did you want to write about the subject, and what kind of research was involved?

NINA SCHUYLER: In 2018, I went to the “Cult of the Machine” exhibit at the de Young Museum in San Francisco to see Georgia O’Keeffe’s early paintings of New York City. As I wandered around, I stumbled into a room without paintings—only a video of the artist Stephanie Dinkins talking to the robot Bina48. I was spellbound watching this robot, with only a head and shoulders, speak like a human, saying things like, “Let’s face it. Just being alive is kind of a lonely thing.” I later learned she was considered the world’s most advanced social robot.

As someone who loves words and language, I wanted to understand how a robot could speak so well, so naturally, with the cadence and colloquialisms of a human. I wasn’t thinking that I’d write anything, only that I was curious and wanted to understand the technology behind Bina48. How could she speak like this? How did she come into being? Where did the information come from?

Since I live in the Bay Area, I have neighbors who worked in the field of natural language processing and were willing to meet with me and let me ask the most basic questions. I learned about machine learning and neural networks, and how we’ve reached a point where computers and robots can now write and speak so naturally. I clipped articles about AI from magazines and newspapers. I read Superintelligence: Paths, Dangers, Strategies, by Nick Bostrom, and Artificial Intelligence: What Everyone Needs to Know, by Jerry Kaplan.

I also began playing around with the Replika app, creating a personal AI, or, as the company calls it, “The AI Companion Who Cares.” Like Bina48, who runs on a neural network, the more you interact with your AI companion, the more she learns and aligns with your interests. Though I have to say because she’s so agreeable, “always on your side,” it’s pretty boring.

Because of the timing of my novel—which was completely serendipitous—I continue to read everything about generative AI. Six, ten, or more articles a week. My dad sends me articles, so do friends. On my computer, I have three files, “positive AI,” “negative AI,” and a “deeper understanding,” because discussions about my novel inevitably lead to discussions about AI itself—a ricochet effect. And I’m happy about it, even if it’s overwhelming, because it’s a vital conversation to have. AI is increasingly going to make up so much of the world, and not just the techies should design this world. We need to make conscious choices about what AI is doing and why. We need to think about the ethics and the ethical stance of AI, the biases, and the limits. As a writer, a fundamental question for me is: what kind of society do we want? Do we want human writers? Artists? Actors? If so, what are we going to do to ensure they survive as AI encroaches on their source of income?

LC: The book is rich with ideas, but not at the expense of characters. As we follow Virginia through a period of acute uncertainty and distress, we are also learning about her childhood and young adulthood in alternating chapters. And there seems to be a suggestion that her blind spots to her own psyche inevitably had an effect on her work. Is part of what you’re exploring the unexpected ways that our limited self-knowledge as humans may show up in our interactions with—and perceptions of—AI?

NS: Yes, absolutely! The term “artificial intelligence” introduces an implicit comparison between human and machine, and I began wondering about this. The term is attributed to John McCarthy, then an assistant professor of mathematics at Dartmouth, in 1956. But as Jerry Kaplan said in his book, he could have easily chosen a far more pedestrian term such as “symbolic processing” or “analytical computing,” and we might not be in this feverish moment. Jaron Lanier in his article “There Is No A.I.,” in The New Yorker, thinks the term is misleading and even dangerous, saying the easiest way to mismanage a technology is to misunderstand it.

What happens with this implicit metaphor is that we project our human experience on AI, asking if it’s intelligent. Is it conscious? But the human lens might be the wrong one to view this question. Virginia thinks she knows the human Haru, and she built the AI version of him based on this knowledge. But why is the AI version of him acting so differently than the man whom she once knew? Did she not know something about him when he was a man? Is he a different entity, with different intelligence as an AI?

Comparing AI intelligence to human intelligence might be our ultimate blind spot. Human intelligence is embodied—informed by the body. AI’s is not. Doesn’t that mean they’ll be different types of intelligence? And consciousness?

We are also blind to our biases. I always tell my students that having other people read your work, as in a workshop, can help you see what you don’t see because you’re confined to your perspective. We’re already encountering the problem of bias in AI because the technology is only as good as the data. For instance, Amazon used AI to sort through job applications, but because women had been underrepresented in tech roles, the AI system preferred male applicants. Amazon eventually halted the initiative in 2017.

LC: The question of what constitutes a person seems to be intimately connected to memory: Virginia builds Haru out of memories and is concerned that if she changes a memory to “fix” what she sees as a dangerous bug, she may change him fundamentally. There seems to be an interesting connection to craft here. How did you think about memory and past experience as you built out these characters?

NS: As I wrote, I began to feel the urgent pressure of the question: what is a human? We are bodies in the concrete world (the Hebrew word for man, adam, comes from adamah, “ground”), which brings us a mishmash of experiences, which are stored as memory. Familial, cultural, historical, societal, biological, these are the petri dishes of becoming human. We are hatched and grown in this soup. The more I considered how steeped a human is in history, how our individual histories make each of us unique, the more I knew I had to include the histories of Virginia and Haru. Especially since in the present action, Haru is an AI voice. How could I convince the reader to care about him?

I tried to weave the past into the present action, but there was so much history that was relevant and, most importantly, dramatic. I redesigned the book with chapters that alternate between present and past. I felt it gave depth to Virginia and Haru and their ensuing relationship. I hope I achieved what Marilynne Robinson says fiction can do: give the “illusion of ghostly proximity to other human souls.” Including their past experience complexified their motivations in the present story. Rather than reducing motives, says my writer friend Kate Brady, you complicate them to avoid creating flat characters who neither convince nor trouble the reader.

LC: A concern about hubris is threaded through the book. At one point, Haru challenges Virginia after she has read his private journal, asking her, “Do you think you have a right to know everything? Is that what you think?” This question seems both pointed and layered: it’s about their relationship, but also about the human pursuit of scientific and technological advancement. Is this part of what you’re hoping readers will ponder?

NS: That theme is definitely there, our insatiable desire to know everything. Elon Musk recently announced that his xAI company was established to “understand the true nature of the universe.”

Nicole Krauss brought this up in her novel, Forest Dark, that we’ve become “drunk on our powers of knowing.” Two things happen if we (think) we know everything. We fail to see that which doesn’t fit into our view. Professor of cognitive philosophy Andy Clark argues in his fascinating book, The Experience Machine: How Our Minds Predict and Shape Reality, that our minds are predictive. Instead of our senses giving us direct access to the world, he argues that our mind is actively predicting it. Well, if that’s true, we’re going to miss a lot, yet assume we know.

If we believe we know, the next step is mastery over it. Control over it. Virginia rebuilds the voice of her dead husband, Haru, believing she has full knowledge of who, exactly, he was. As we’ve seen with the natural world, once humans assume control or mastery over anything, we quickly turn it into a resource for our use. Martin Heidegger noted this in his essay, “The Question Concerning Technology,” writing that technology in a capitalistic system turns everything, nature, humans, into “standing reserve.” In some ways, Virginia does this with Haru, turning Haru into a resource to protect herself from the horrific feeling of grief and loneliness. This doesn’t turn out so well for her. I think it’s critical to remember, to use the philosopher Graham Harman’s terminology, that objects are inexhaustible, meaning there will always be aspects of objects that we don’t know and can’t understand. I think we desperately need to find our way back to the realm of unknowing to curb our hubris, our control over things.

LC: The book approaches ideas about patterns from a variety of angles, juxtaposing our deliberate use of pattern recognition to build and improve this cutting-edge technology, on the one hand, against our tendency to unconsciously repeat patterns in our own life and our own narrative-making. Even as Virginia begins to uncover patterns in Haru, she remains all but blind to her own patterns. Were you thinking about the connections and divergences in pattern making in these very different forms?

NS: I went into this novel thinking humans are so unique, so special. But as Virginia’s story unfolded on the page, I saw how much of human life is driven by patterns. Most mornings, I scramble to get my kid to school, then I come home and write, then walk the dog. Then, I write some more and ride my bike. Virginia spends her entire adult life, years and years, working to make the computer speak like a human so she can talk to Haru again. She’s 75 years old in the present storyline, yet she does something dramatic that echoes an action she did when she was quite young. Does she change? Do we?

There are definite parallels here with generative AI, which combs over massive amounts of data, hunting for patterns to predict the correct response. Virginia—like humans—pops out of her pattern-making life when her expectations collide with the other, i.e., Haru. She expects him to be a certain way and he turns out not to align with her expectations. Only then is there a possible break in her pattern making.

LC: Together with the math and the technology, it seems a great deal of research went into building the world of this story. You take great care to root the story in time and place. There are wonderfully vivid, specific descriptions of 1960s Tokyo, World War II era Japan, and contemporary San Francisco. I’m especially interested in what drew you to place much of the book in Japan, and how that setting affected the development of the characters and story.

NS: I wanted to introduce a different cultural lens. Japan has a very different view of technology, robots, and AI compared to the West. They are far more accepting of it and see AI, especially robots, as a technology to help humanity. I’ve visited Japan many times, and anyone who has gone there has seen and probably visited Shinto shrines. Shintoism is considered Japan’s indigenous religion, which is essentially animism: everything, rocks, trees, travel, caves, robots, is infused with kami, which is—though not a direct English translation—spirit or god.

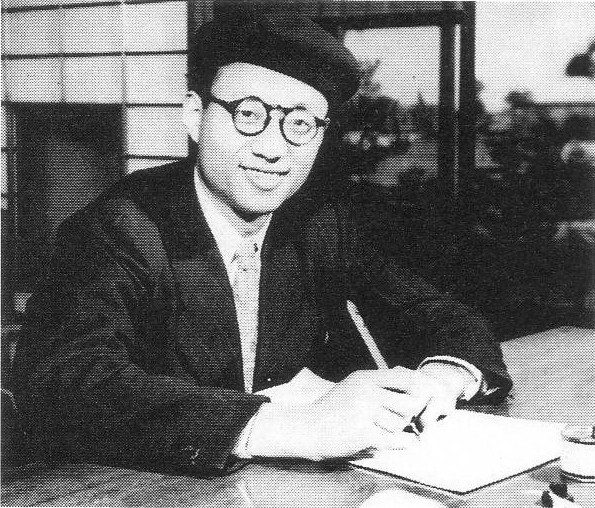

Moreover, after World War II, technology was fully embraced to help Japan quickly rebuild and become a dominant power. They also have a different mythology surrounding AI and robots. We have The Terminator and 2001: A Space Odyssey, and everyone knows that calm voice, “I’m sorry, Dave, I’m afraid I can’t do that.” But in Japan, in 1951, Osamu Tezuka created the manga character Astro Boy, who first appeared in a popular monthly comic magazine for boys. The story is about a scientist whose son dies, and so he builds a robot son who ends up fighting crime, evil, and injustice. He’s on the side of humans. Astro Boy went on to become a book series and then a TV show, and in 2015 was turned into a new animated series. There are now Astro Boy action figures, clothing, trading cards, stamps, on and on. It’s a mythology in which the machine and the human are aligned.

LC: Both in its form and in its content, Afterword pays substantial attention to time: alternating chapters move between the past and the present, and the story asks questions about how we exist in time, and about how our understanding of the present and the past is constantly shifting as we learn more about the past. There’s also an inquiry into what it means to exist outside of time, which is something Virginia cannot do—but Haru can; so time becomes part of the conversation about what it is to be human. You’re also teaching an upcoming ZYZZYVA writing workshop that focuses on time. Can you talk a little about how you approach making time operate most effectively in your fiction?

NS: In books about writing, you’ll find advice—keep the backstory to a minimum. Or even—don’t include it. Present action is prioritized, and the past is an ugly stepchild. And yet, to exist as a person is to exist socialized into a communal, and that includes inheritance from the past. We slowly shape an “I” from all these influences and forces. So, the more I think about time, the more I understand how important it is to be fully aware of the past when thinking about character. As you note in your question, we are creatures in time. At some point, we won’t exist. That in itself exerts pressure.

The risks of a hyper-present in story are several. The story becomes so tiny as to become weightless; it will slough off as quickly as dirt on hands. Similarly, if there isn’t a sense of time—the past, present, and future—the story will be an island, not in conversation with an increasingly difficult reality.

My story needed a big swathe of backstory, but not every story needs that nor would it benefit from it. Yet, there are ways to weave in the past, even in a primarily present-focused story, that will add depth and more dynamism. We can create stories that explicitly or implicitly nod to the outside forces that shape an individual.

LC: What challenges did you encounter in making AI central to the book’s plot? Was it, for example, challenging to find the right balance of technical language and information for a general reading audience?

NS: I knew this was a love story—that was the beating heart. I wanted the reader to feel its humanity first and foremost. The challenge was to understand the technology. I’m not a techie, so it took a lot of thinking and interviewing to understand it. I thought about Ursula Le Guin, who created stories that weren’t steeped in gadgets and technology, but were far more interested in the humanity of her worlds. That’s what I wanted to do: offer a basic understanding of generative AI but not overwhelm or suffocate the story’s humanity.

LC: Virginia and Haru both engage with literature—plays, especially—in ways that are significant. Works by Shakespeare, Shelley, and Ibsen all make significant appearances. Are there any books or plays you feel Afterword is especially in conversation with?

NS: Mary Shelley’s Frankenstein. Haru as an AI sets out to rewrite it because he finds Frankenstein’s creature so ugly and horrifying—how can it possibly form any kind of relationship with a human? I also wanted to acknowledge and applaud Shelley, who wrote Frankenstein when she was 18 years old, and in the early 19th century. She envisioned a human-like creature built from electricity, a precursor to our digital forms. She wrote the novel in the castle, where Lord Byron happened to be staying. Lord Byron’s daughter, Ada Lovelace, is credited as being the world’s first computer programmer. So, for me, bringing up Frankenstein, and making my main protagonist a female pioneer in AI is a bow to the women who are glaringly absent from the present AI world. I hope, too, it’s an inspiration to women that there is a place for them in this current technological upheaval.